It doesn’t matter where you work in an IT organization; there are likely three common words (or phrases) in your everyday discussions – complexity, change, and artificial intelligence (AI). You might have already read or heard lots about these three areas via your usual IT service management (ITSM) channels, but what about getting alternative views?

I was lucky enough to talk with Dave Snowden, Founder of The Cynefin Company, who covered all three. Here are some of the highlights, with my interpretation of elements of what Dave shared in our chat. I bet much of it differs from what you usually hear (or read).

Understand that idea commodification signals decline

One of the signs that an idea is coming to the end of its life cycle is commodification. For example, the minute SAFe (the Scaled Agile Framework) came along in the Agile movement, we knew Agile was over because SAFe commodified the space. It took some years, but you can now see what’s happened.

Organizations can’t predict such change, but they can develop early warning systems that anticipate shifts before they happen (although not their timing). We can create training sets, that is, data sets based on previous movements, and use them to alert executives to the plausibility that a change is about to happen, before they would see it with conventional scanning.

You can’t model a complex system

Instead, you have to stimulate or simulate it, and that’s the problem with approaches like Value Stream Mapping and the Viable System Model: they come from a period when people thought you could construct a model of the system.

We now understand complexity. We know a complex system has so many variables that no model will actually work. So you have to stimulate or simulate the system until you see patterns, and only then can you start to use prediction. It’s a big switch.

You need to understand how people make decisions

In the 1990s, Peter Senge’s learning organization introduced the concept of a common mission, common goals, and common purpose. Every few years, we put a different name on the same platitudes on the same flip charts. It was mission, then it became values, then it became purpose.

The idea was that you control uncertainty by all having a common goal or a “North Star,” and then everybody aligns behind it. But that isn’t how it works. If you want to understand how people make decisions, start with who they’re talking with (proximity really matters for humans). Then you need to recognize two other things:

- Affordances – this comes from Gibson’s concept: what does the environment people are in afford them? For example, having a strategic goal to grow cactuses is stupid if you live in a paddy field. People too often start with where they would like to be, without first understanding where they are and what’s possible.

- Assemblage – we surround ourselves with people who tell similar stories. Those stories build and accumulate, and they gain a type of material reality. The story is no longer just a social construct. It exists independently of the storyteller, creating downward causality, and people can’t escape those story patterns.

Be careful with the wisdom of crowds

There are three conditions for the wisdom of crowds to work. First, people must have tacit knowledge of the field. Second, no one may talk with anybody else or see anyone else’s guess. Third, it must be a game in which nobody has a personal stake in the outcome.

There’s a scientific reason behind this. If you have a lot of independent agents making a decision, the results form a Gaussian distribution. However, the minute they talk, the results form a Pareto distribution. So you don’t get a clear decision.

Basically, the dominant voices start to control how things are interpreted. Power comes in. So if we’re using the wisdom of crowds, we want every individual to index the material without talking to anyone else. Then there’s statistical validity.

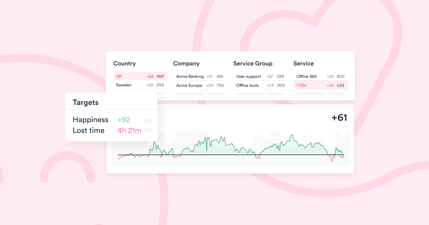

In a complex system, measure vectors, not outcomes

If you set an outcome, you focus on the outcome measure, such as key performance indicators (KPIs) or objectives and key results (OKRs). However, all that does is create perverse incentives to achieve the target. For example, in a call center, people will close a ticket and reopen it to hit a target. It’s gaming behavior.

A vector, by contrast, measures the direction of travel, the speed of travel, and the energy consumed. We can measure that, and then we can say, “Well, you did get more stories like this and fewer stories like those.” So the vector becomes the target.

AI, prejudice, and our loss of judgment

AI makes decisions inductively and depends on its training data sets. So if you allow AI to trawl the web, it will reinforce the prejudices of the past. It’s not going to see novelty.

Human beings evolved to think abductively, not inductively. In other words, we evolved to make decisions without training data, and the danger is that relying on AI will erode that capacity. We’ve now got strong evidence that if people use AI, they lose the capacity to exercise judgment, particularly moral judgment.

AI is determined by its training data sets. So if you use AI, you should design the training data sets yourself. You shouldn’t rely on LLMs, because they’ve been trained on whatever could be scraped from whatever sources.

You should also realize that the carbon footprint of LLMs is huge. I mean, they’re a significant and fast-growing contributor to emissions. Everybody’s focused on language interpretation when more sophisticated AI methods are available to us. Meanwhile, the human brain runs on roughly 20 watts, and it does more. You’ve got to ensure you don’t replace that capacity.

To hear more about what Dave has to say about complexity, change, and AI (and other things), watch his episode of our IT Experience Podcast.