What do you think about the value, and appropriateness, of IT service desk service level agreements (SLAs)? Are you still heavily reliant on traditional SLAs for understanding your service desk’s performance?

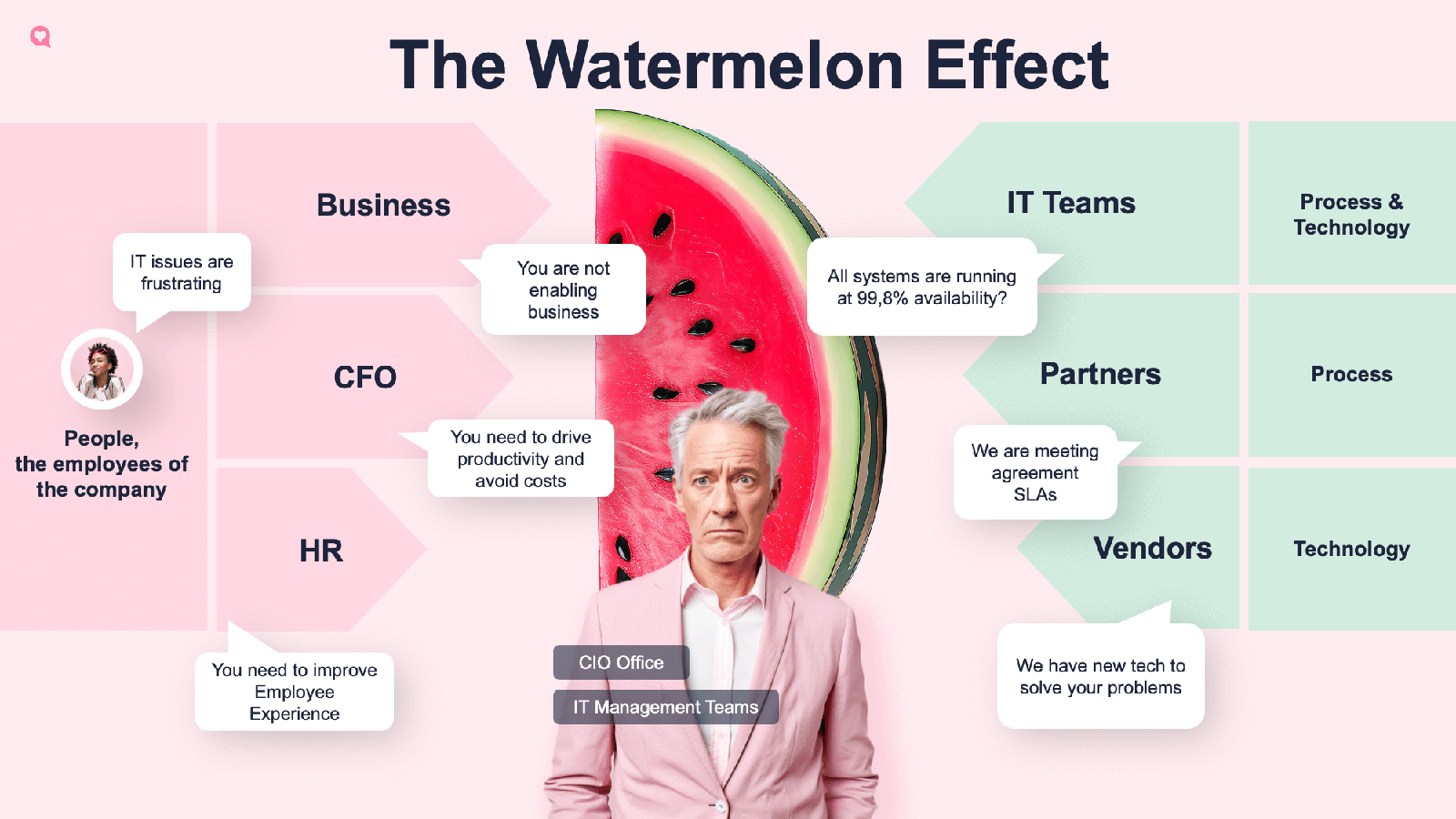

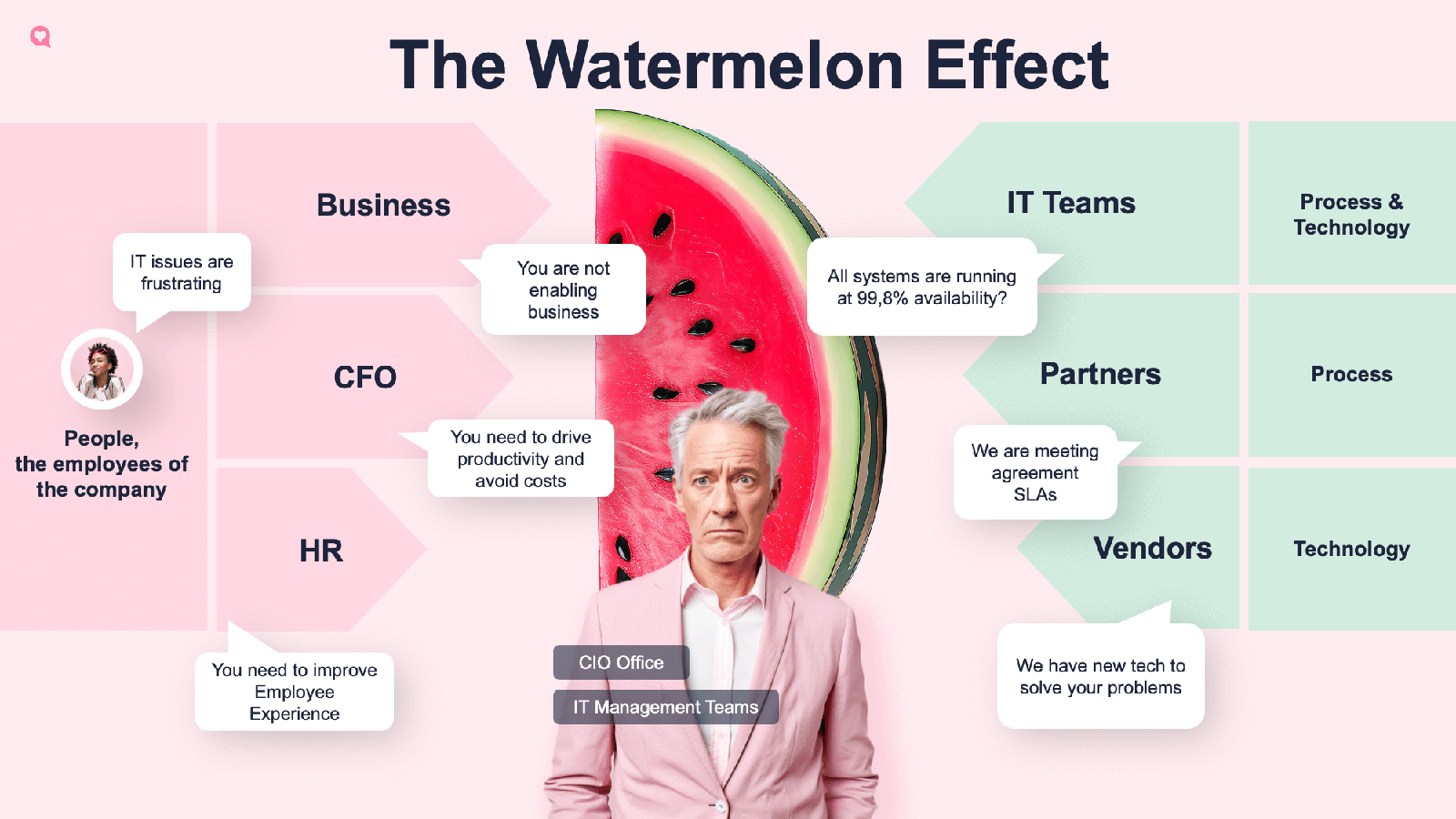

The “watermelon effect” and “watermelon SLAs” are two of the most used phrases when talking about experience management for IT support. They refer to situations where service level targets are being met, but end-users are still unhappy with the IT service delivery and support they receive. So, like a watermelon, the reported performance is visibly green, but underneath, there’s red. It’s considered a common IT support issue, but where’s the proof?

To help, this blog uses aggregated HappySignals customer data examples to look beyond the anecdotal evidence to prove that the “watermelon effect” is a real issue affecting IT service desks and broader IT operations.

The “green” of traditional service-level targets

Most IT organizations produce a monthly reporting pack that’s shared with key IT and business stakeholders. These packs might include the odd missed target and the associated improvement actions, but in the main, they can be considered “seas of green,” with the IT service provider, whether internal or outsourced, meeting most of its agreed targets. Performance is considered good, perhaps great.

However, the all-too-frequent C-level conversations about technology horror stories offer a different view, with some employees dissatisfied with the corporate IT service delivery and support capabilities. This is the red hidden below the green when only traditional IT metrics are used to measure what IT thinks is important.

But can this “watermelon effect” be proved? The short answer is “yes,” with specific proof point examples shared below.

Real-world examples of the “watermelon effect”

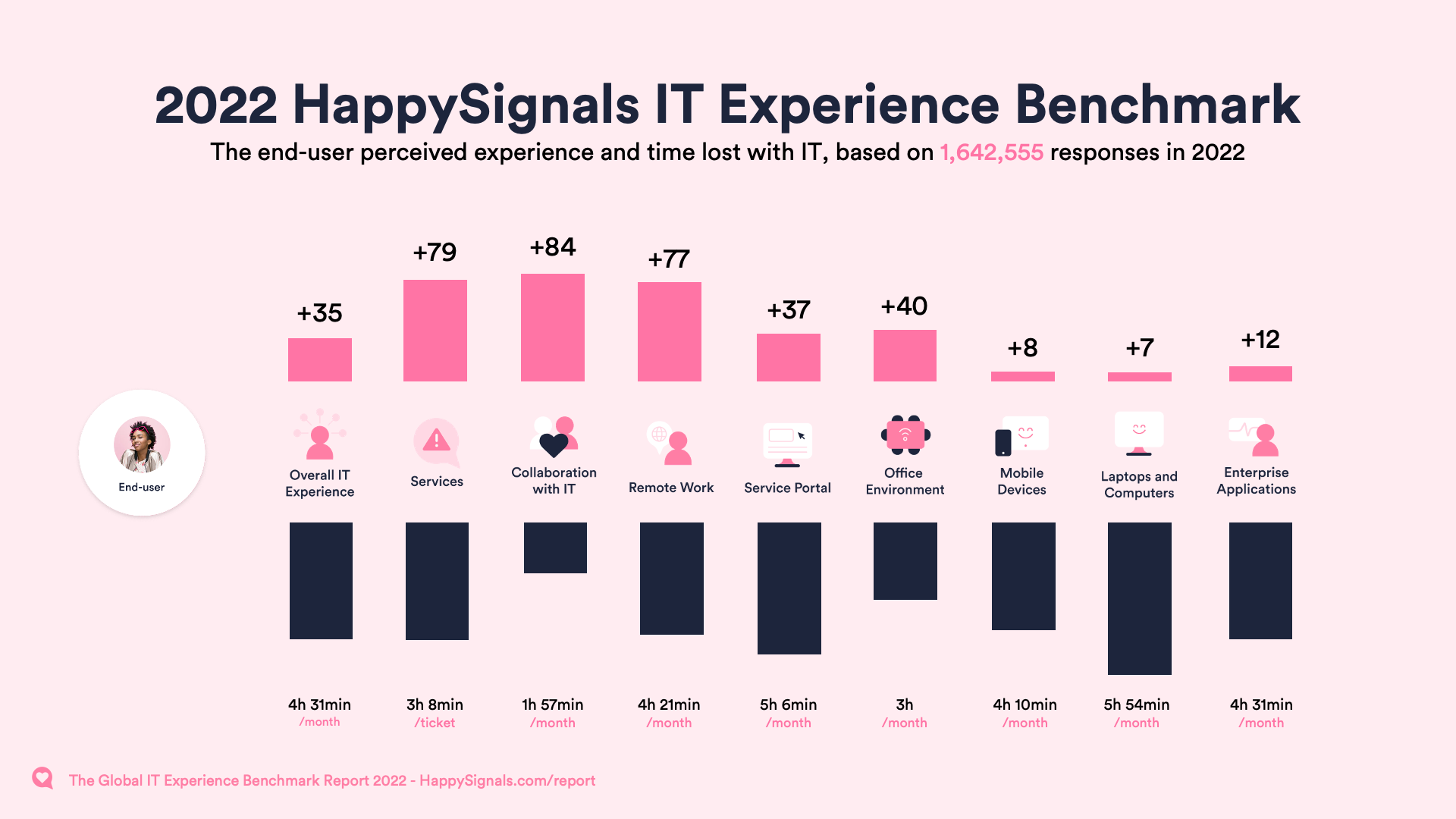

The following examples are taken from the 9th Global IT Experience Benchmark Report: H2/2022, created from 1,642,555 end-user responses in 2022 from over 130 countries. The data comes from continuous surveys related to:

- Ticket-based IT (incidents and service requests). End-user responses are collected immediately after tickets are resolved, with a 25-30% response rate.

- Proactive IT. Surveys are sent proactively to end-users about areas such as the Overall IT Experience, Enterprise Applications, Laptops and Computers, Remote Work, and Office Environment.

Data is collected in three areas:

- Happiness – end-users rate, on a scale from 0-10, how happy they are with the IT area being measured

- Productivity – end-users estimate how much work time they lost due to the IT area being measured

- Factors – end-users select from a list of suggested reasons influencing their Happiness rating; multiple factors can be chosen.
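The Happiness figures quoted in this blog are signed scores (for example, +73) rather than 0-10 averages. The text does not spell out how individual ratings become such a score, so the sketch below is only one plausible reading: it assumes an NPS-style net score, where the share of low ratings is subtracted from the share of high ratings. The function name, the 9-10 and 0-6 thresholds, and the sample ratings are all illustrative assumptions, not the report's confirmed method.

```python
def happiness_score(ratings):
    """Net score from 0-10 ratings (assumed NPS-style; thresholds are hypothetical)."""
    if not ratings:
        raise ValueError("at least one rating is required")
    # Assumption: ratings of 9-10 count as happy, 0-6 as unhappy, 7-8 as neutral.
    happy = sum(1 for r in ratings if r >= 9)
    unhappy = sum(1 for r in ratings if r <= 6)
    # Percentage of happy responses minus percentage of unhappy responses.
    return round(100 * (happy - unhappy) / len(ratings))


# Made-up survey responses for illustration only.
sample_ratings = [10, 10, 9, 9, 9, 10, 9, 8, 8, 3]
print(happiness_score(sample_ratings))
```

Under these assumptions, a score can range from -100 (everyone unhappy) to +100 (everyone happy), which is why a single dissatisfied respondent moves the figure more than an average would.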

Finally, it’s important to appreciate that the data is from HappySignals customers who are at different points in their experience improvement journeys, with the data likely to be significantly better than for organizations with limited or no insight into “the red of the watermelon.”

Example #1: Issues with IT touchpoints

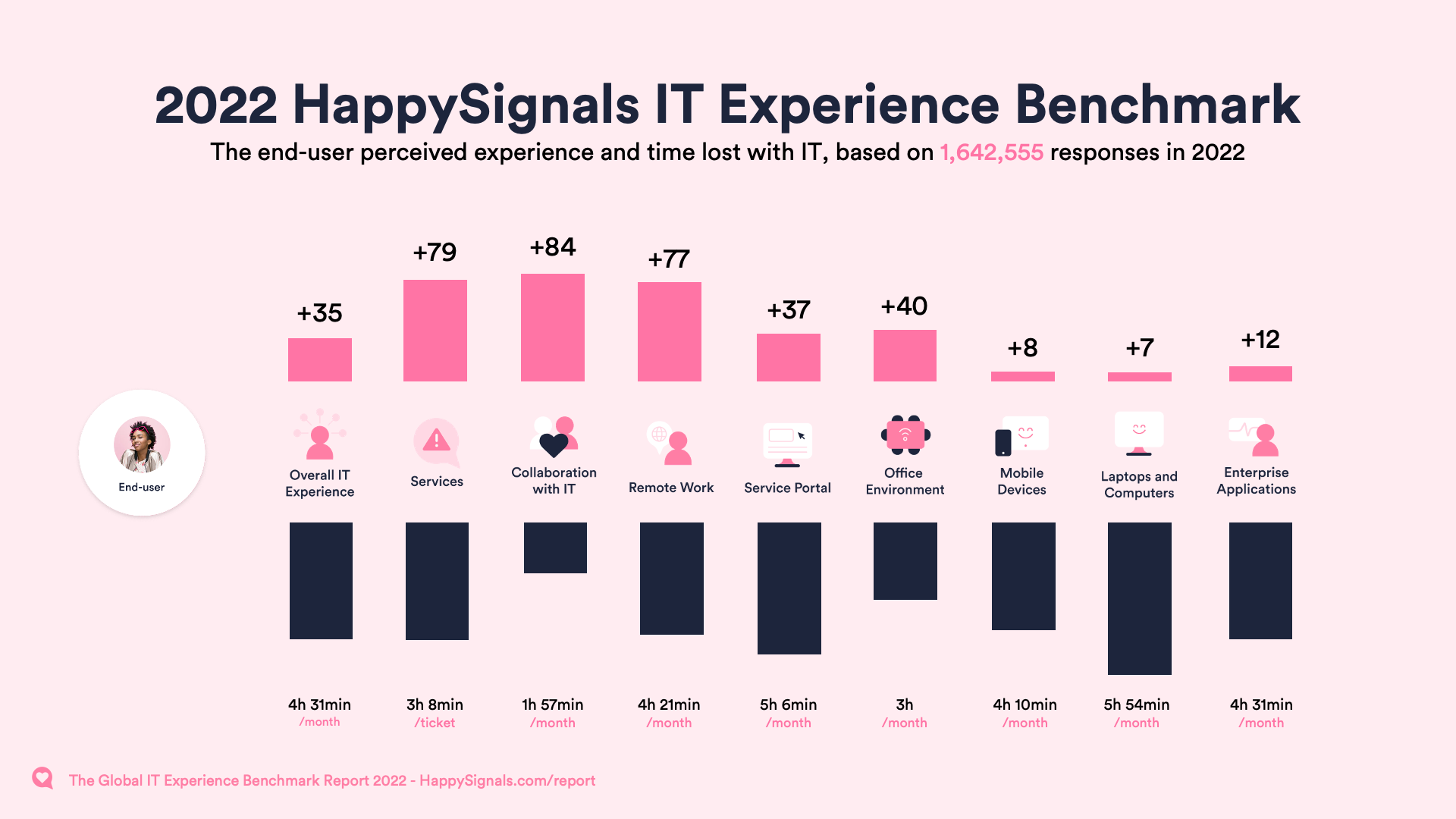

The Proactive IT experience data shows end-users have mixed views of different IT capabilities. As shown in the graphic below, end-users are more unhappy with their corporate devices and enterprise applications than with other aspects of IT performance. They also lose a significant amount of productivity each month because of these.

If an SLA-focused organization actually has insight across these areas, they still might not understand the issues – because of the likely green performance of their operationally-focused metrics and targets. Quite simply, all looks well with corporate devices, enterprise applications, and other areas despite the end-user issues – your organization sees the green but is oblivious to the red “inside the watermelon.”

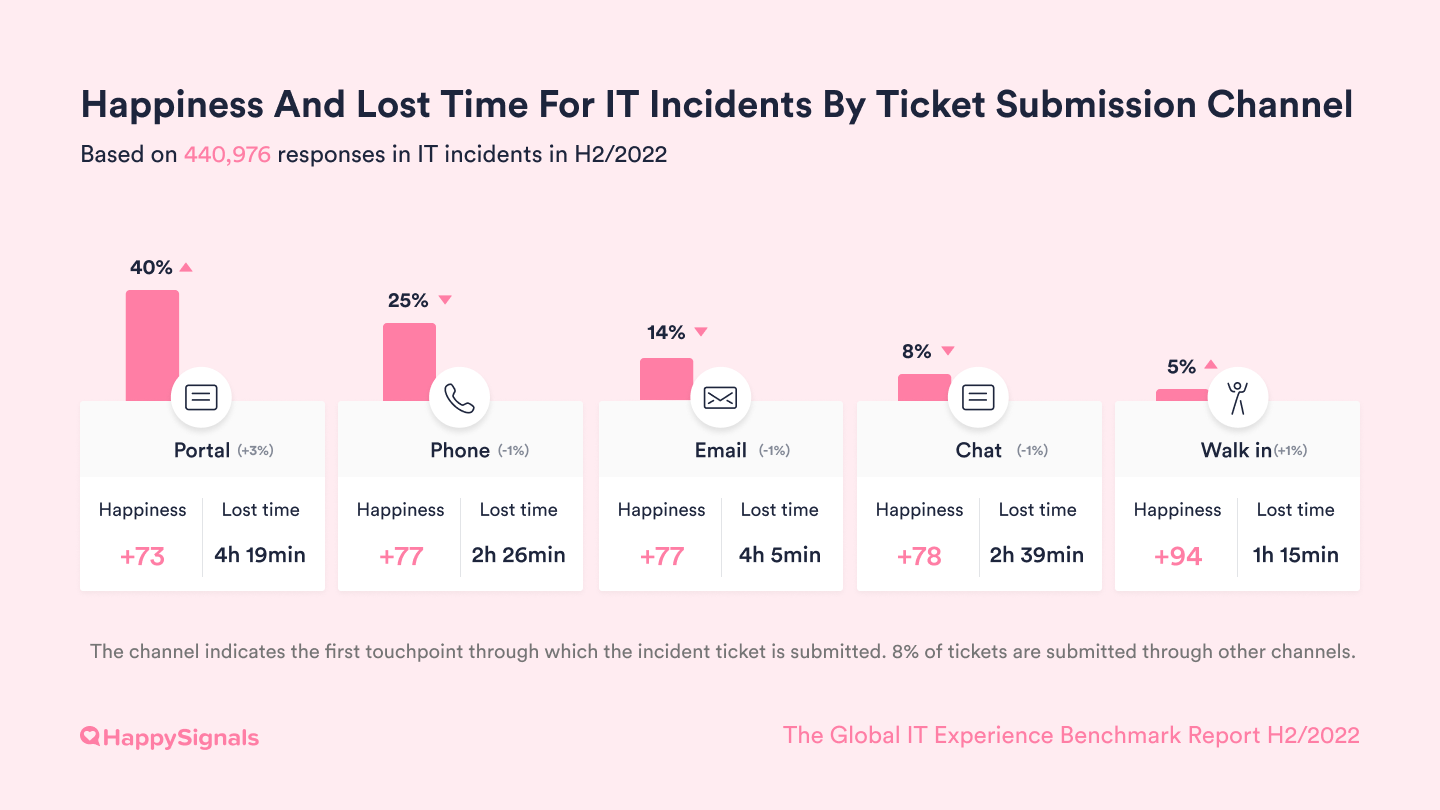

Example #2: Issues with IT support channels

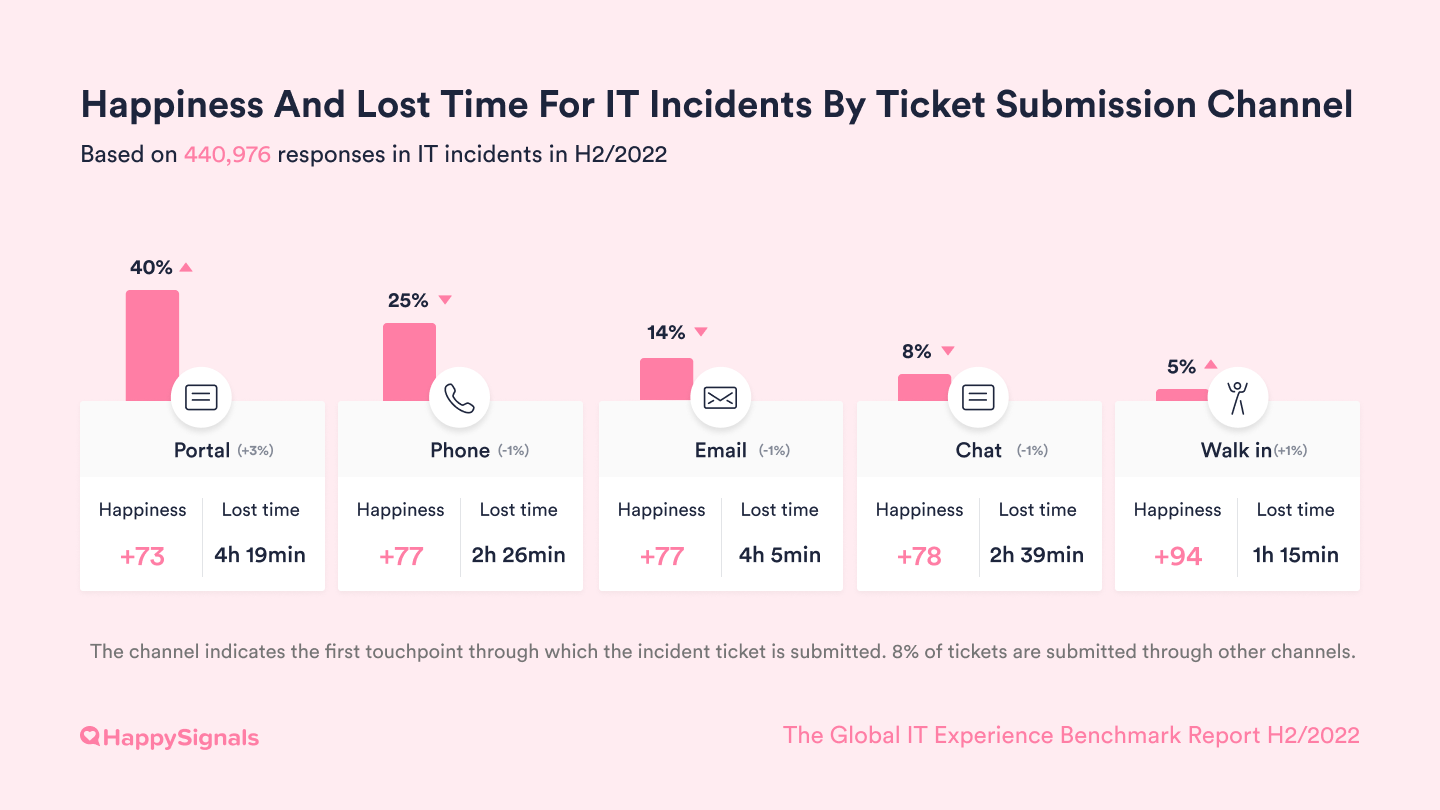

Do you know if your IT self-service portal delivers the promised benefits of being a “better, faster, cheaper” support channel? The graphic below shows that while the portal is the most used IT support channel, it offers the poorest employee experience (Happiness is +73). Additionally, employees perceive themselves to lose significantly more time (and productivity) with the portal than with the phone channel – nearly two hours per ticket.

Traditional metrics might show the positive trend of greater portal use but completely miss its adverse impact on business operations and IT’s reputation. Again, your organization likely sees the green but is oblivious to the red “inside the watermelon.”

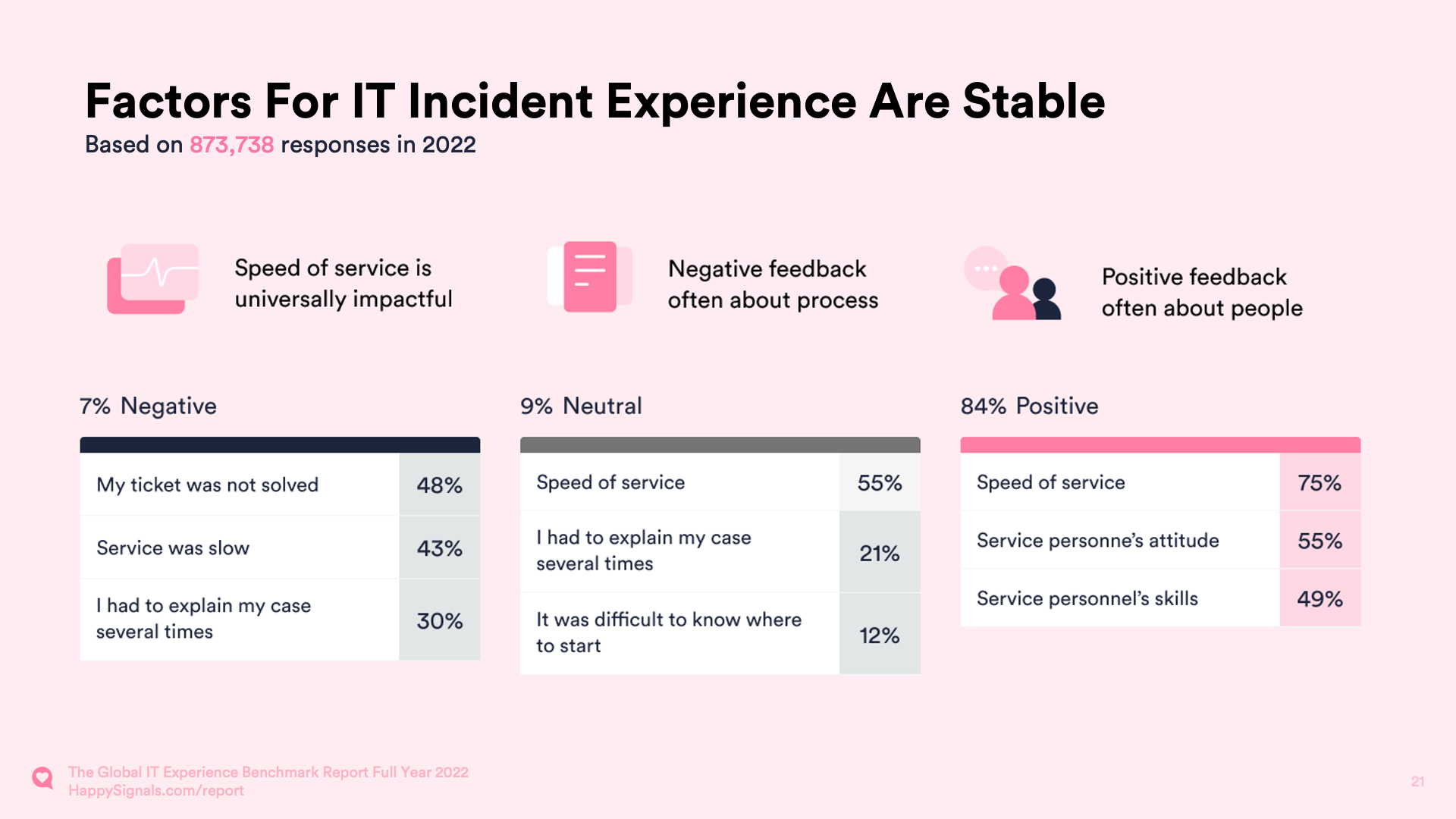

Example #3: The factors causing poor end-user experiences

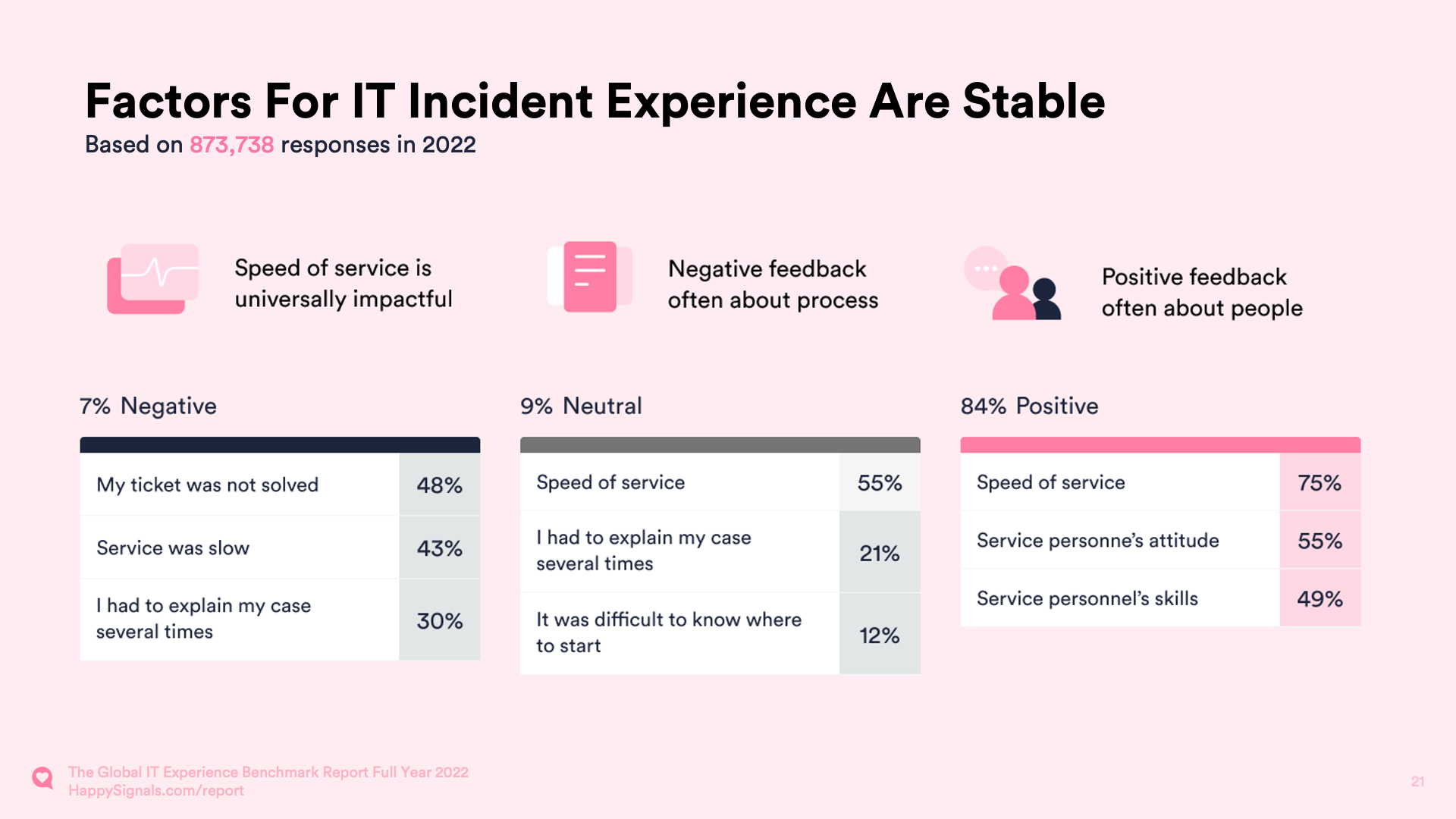

While traditional IT support metrics likely hide many of the issues your end-users face, the factors causing negative experiences are an extra level of insight that’s missed. The graphic below shows the key factors that result in positive, neutral, and negative incident management experiences.

Your IT service desk probably knows what causes positive experiences, especially given the long-held focus on efficiency and recent interest in delivering better experiences. However, the causes of neutral and negative feedback are likely unknown, at least beyond “gut feelings.”

Two of the key factors for negative experiences should be a concern for any IT service desk – “My ticket was not resolved” and “I had to explain my case several times.” Your organization’s CSAT capabilities might highlight some negative end-user experiences, but how do they pinpoint the leading causes of end-user issues? Plus, the SLA metrics might again be green, with your IT organization oblivious to the red “inside the watermelon.”

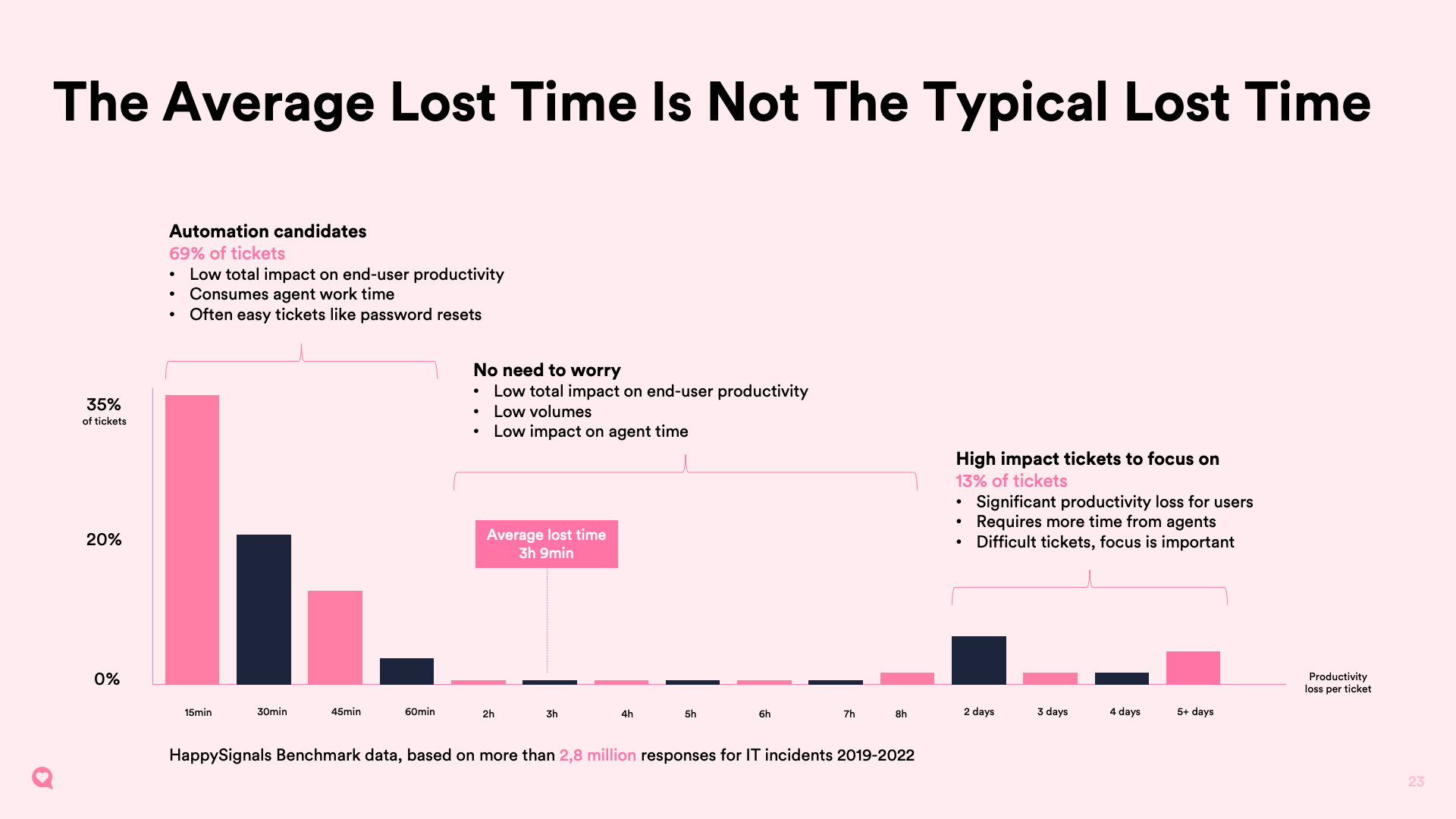

Example #4: Lost employee time and tickets

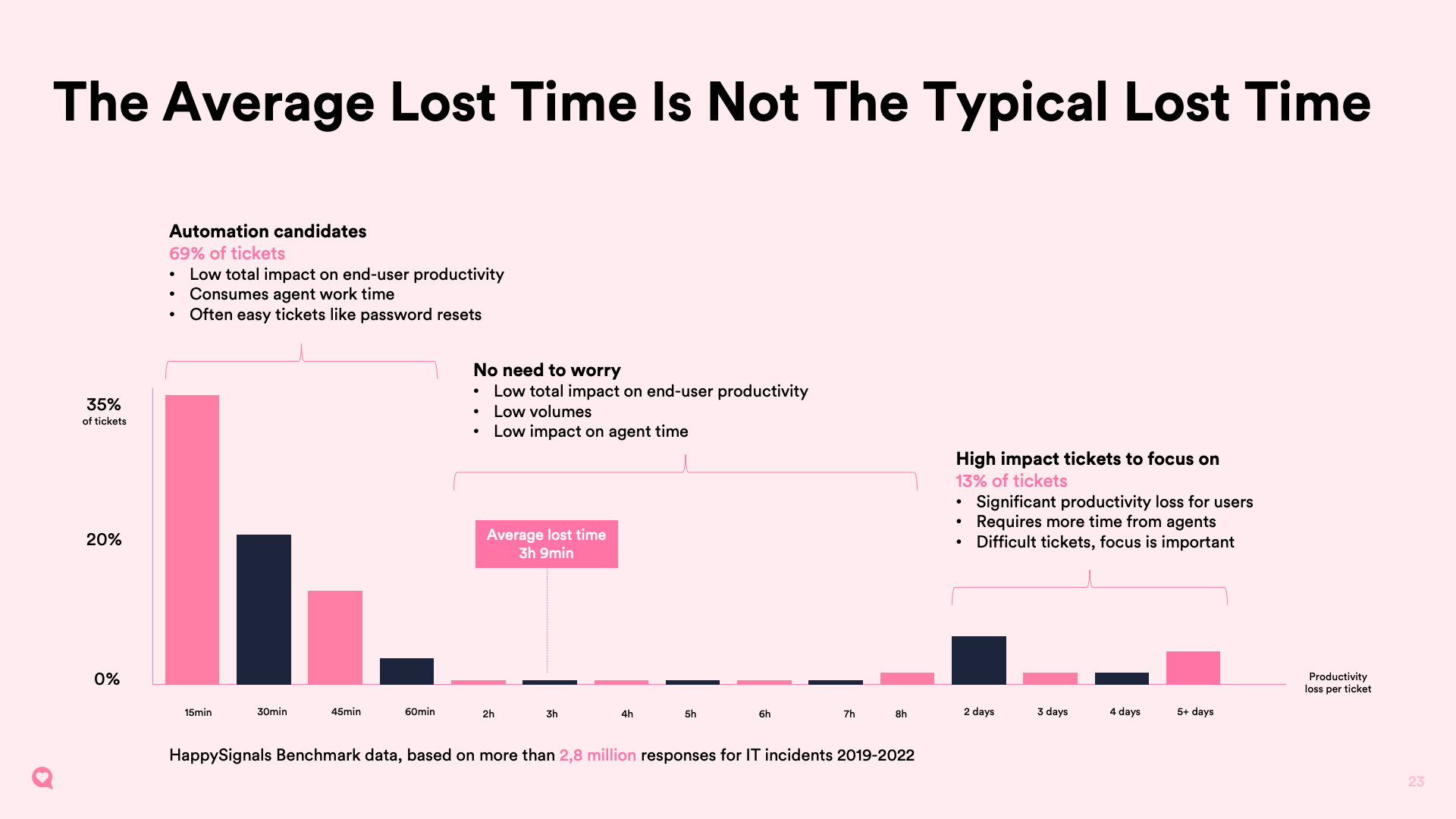

Most IT service desks monitor their aged tickets, perhaps with a regular purge to reduce their number. But what other insight does your IT organization have about which tickets cause more end-user issues than others?

The graphic below shows the distribution of end-user lost time with tickets. The headline statistic is that “80% of employees’ perceived lost time comes from only 13% of incident tickets.” However, the graphic shows not only how heavily this 13% of tickets affects end-user productivity but also that the average lost time of 3 hours and 9 minutes per ticket is not the typical lost time, because a small number of tickets with very high lost time pulls the average up.

This granular insight allows IT support teams to move away from a focus on the average lost time (if it is known at all) or on all tickets equally. Instead, they can concentrate on understanding the incident types, and their root causes, that cost end-users so much time and productivity.
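The difference between average and typical lost time, and the way a small share of tickets can dominate the total, can be illustrated with a short calculation. This is a minimal sketch using made-up numbers, not the benchmark data: the function name `lost_time_concentration` and the sample values are hypothetical.

```python
from statistics import mean, median


def lost_time_concentration(lost_minutes, share=0.80):
    """Fraction of tickets (worst first) that accounts for `share` of all lost time."""
    ordered = sorted(lost_minutes, reverse=True)
    total = sum(ordered)
    if total == 0:
        return 0.0
    running = 0
    for count, minutes in enumerate(ordered, start=1):
        running += minutes
        if running >= share * total:
            return count / len(ordered)
    return 1.0


# Hypothetical perceived lost time per ticket, in minutes.
sample = [5, 10, 10, 15, 20, 30, 30, 45, 60, 1200]

print(lost_time_concentration(sample))  # share of tickets behind 80% of lost time
print(mean(sample), median(sample))     # the average sits far above the typical ticket
```

In this invented sample, a single long-running ticket drives most of the lost time, so the mean is several times the median. Running the same calculation per incident type is one way to find where improvement effort would return the most time to end-users.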

How Human-Centric IT helps

Hopefully, these four examples – and you can visit the IT Experience Benchmark Report webpage for more – are data-based proof that the “watermelon effect” exists. They show how – even for organizations already focused on improving experiences – experience data and XLA targets can highlight end-user-felt issues that go unnoticed with traditional IT support metrics.

The experience data provides insight into the red below the green skin of the watermelon, often along with the probable root causes. But just knowing that there are issues isn’t enough. Instead, the data is simply the starting point for making changes in the right places.

For more data and insights, please read more about Human-Centric IT Experience Management.